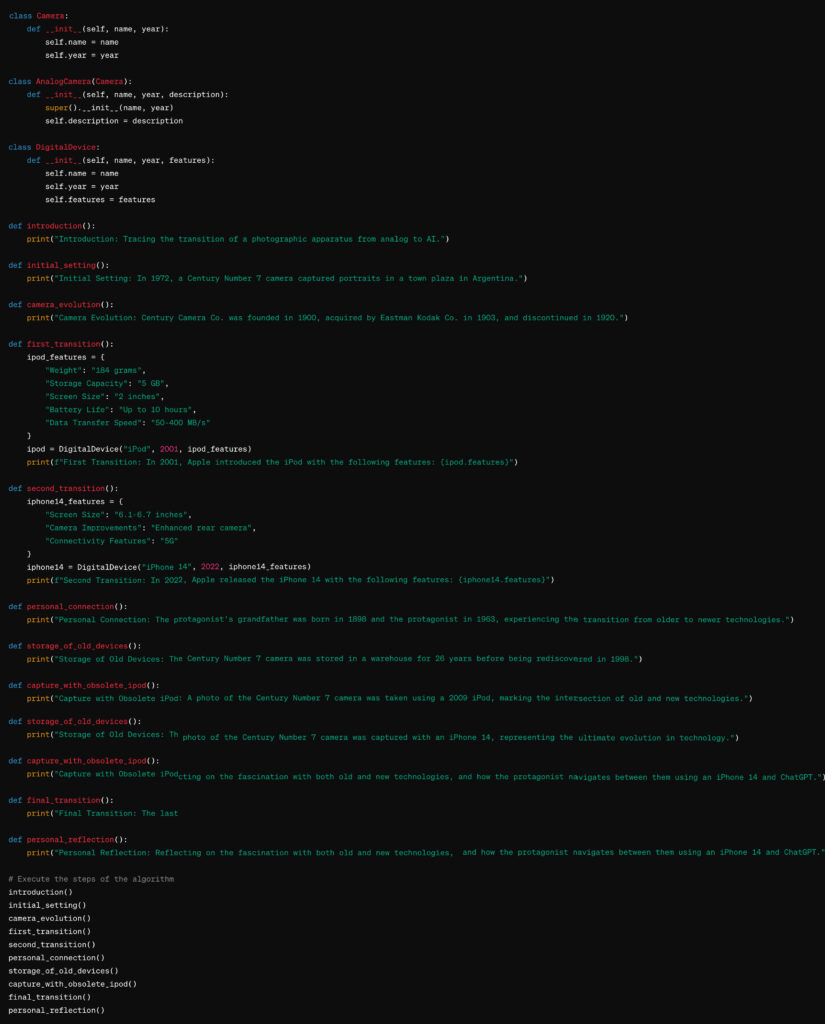

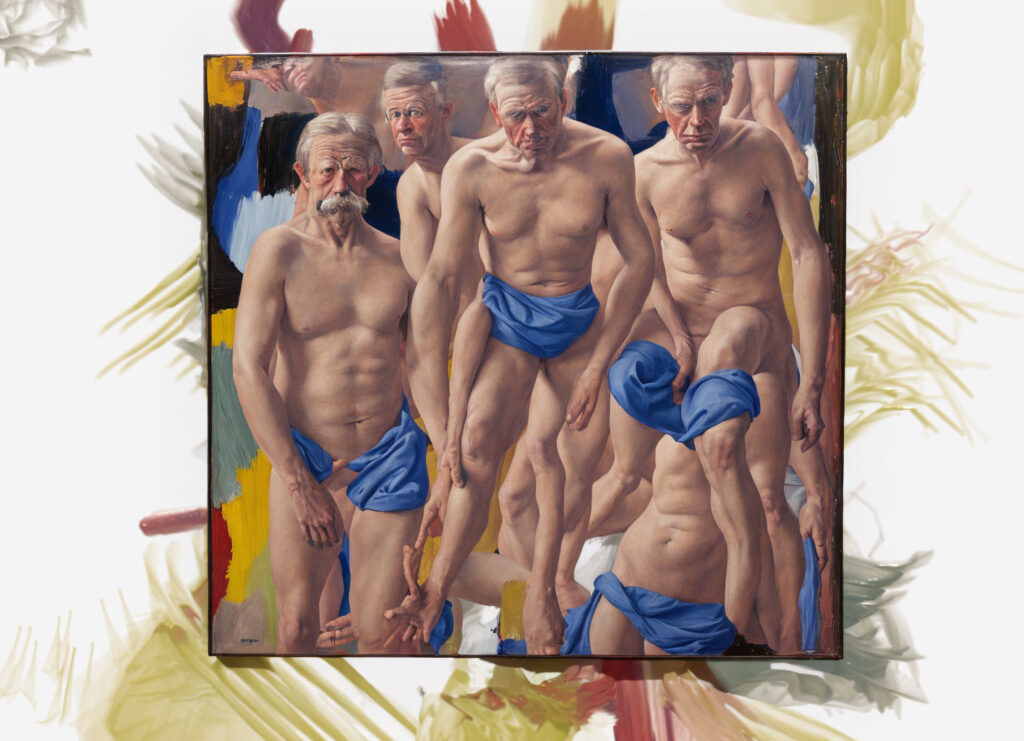

Marcello Mercado, The Algorithm, 2024, Process art – New media art

The Algorithm

2024

Process Art – New Media Art – Photography

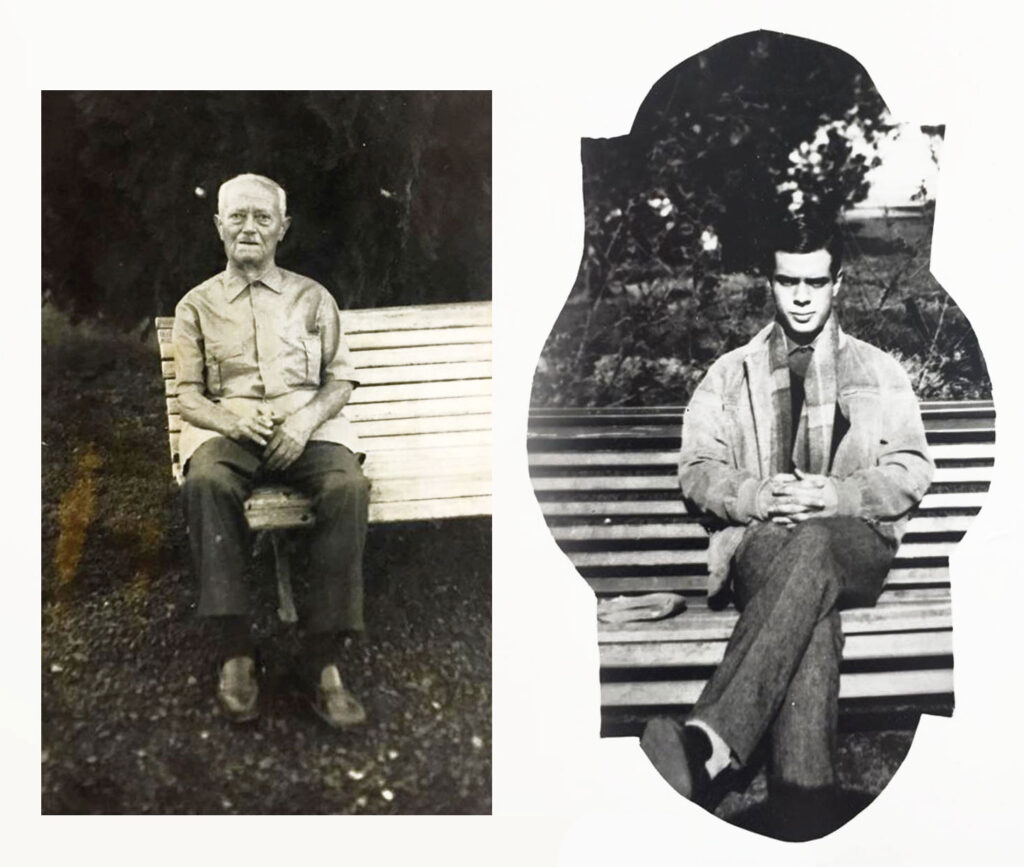

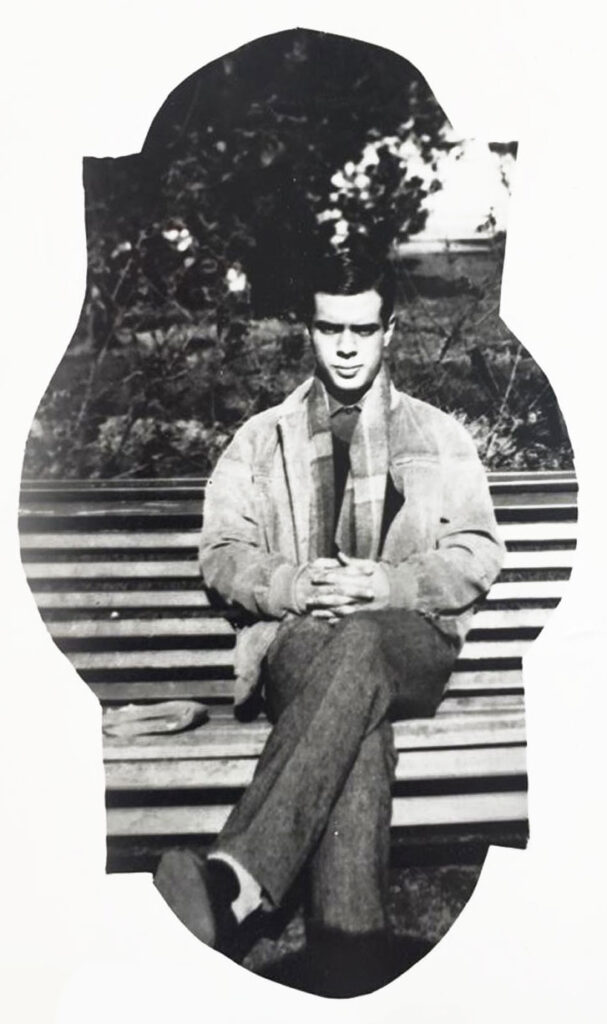

In 1972, a Century Number 7 camera took a portrait of my maternal grandfather in a town square in northern Argentina. In 1980, the same camera took a portrait of me; by then my grandfather had died. This time, the photographer framed my portrait with a vintage mask, which made it look older than the 1972 portrait of my grandfather.

In 1998, I was able to recover the remains of that camera, which had been stored in a warehouse for 26 years.

This year, 2024, I photographed the remains of that Century Number 7 camera with an obsolete 2009 iPod, and finally took the last photo of the bellows camera with an iPhone 14.

This process project aims to build an algorithm that chains together the transition from a physically very heavy analog photographic recording device to an AI language model: an evolution and a story told through three cameras and a language model that builds an algorithm on demand.

Two portraits taken with the same Century Number 7 camera in a town square in northern Argentina, in 1972 and 1980.

02. Detail of my portrait taken with a Century Number 7 camera in 1980. A vintage mask gives it an older appearance than the photo of my grandfather taken eight years earlier.

Remnants of the rescued Century Number 7 camera that took my grandfather’s portrait in 1972 and my portrait in 1980. (iPhone 14 photo)

Here are some of its key features:

Film Format: The Century Number 7 camera was designed to use large-format film, usually in sizes such as 4×5 inches or 8×10 inches, which made it possible to capture highly detailed images.

Folding design: The camera had a folding design that allowed it to be collapsed for transport and storage. When folded, it became more compact and portable, which suited photographers who needed mobility.

Lens Focusing: The Century Number 7 typically came equipped with a high-quality lens that was focused manually with an extendable bellows. Extending or retracting the bellows changed the distance between the lens and the film, allowing the image to be brought into sharp focus.

Rugged construction: Large-format cameras such as the Century Number 7 were often built from durable materials such as wood and metal, which made them solid and stable. This ruggedness contributed to the camera's longevity and its ability to withstand the rigors of outdoor use.

Tripod Mount: Because of its size, the Century Number 7 would typically be mounted on a tripod to keep it steady during photography. Tripods provided solid support and allowed the height and angle of the camera to be adjusted to suit the photographer's needs.

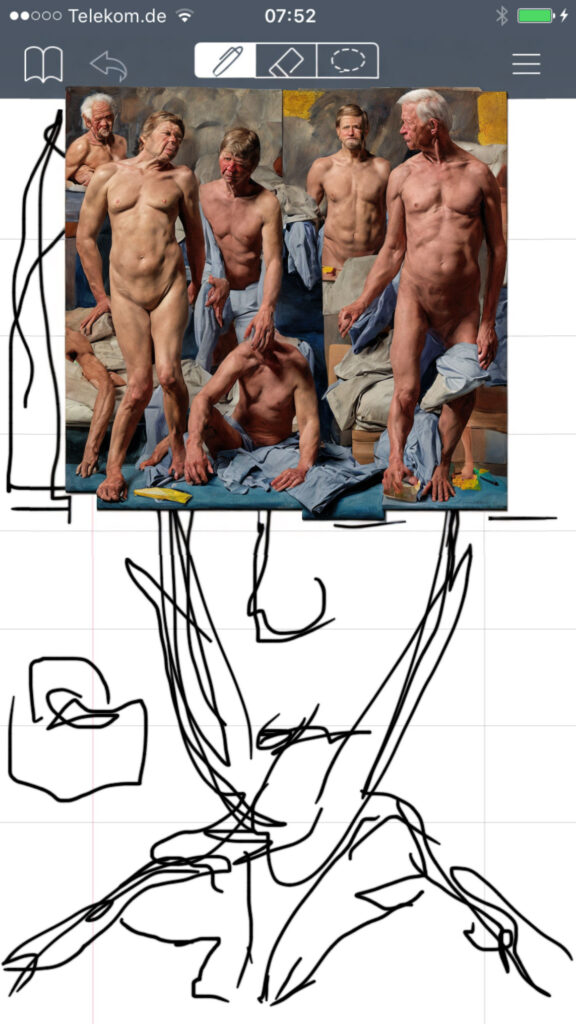

A 2009 iPod took an image of the Century Number 7.

In September 2009, Apple released the fifth-generation iPod nano with a built-in video camera. Here are the key features of this camera:

Resolution: The camera on the fifth-generation iPod nano recorded video at 640 x 480 pixels (VGA) at 30 frames per second.

Storage capacity: The iPod nano allowed you to record video directly on the device and store it in its internal memory, which varied depending on the model’s capacity (8GB or 16GB).

Video format: Videos were recorded in H.264 format and saved as .MOV files.

Other features: The iPod nano’s camera could take still photos at a resolution of 640 x 480 pixels. It also offered basic video editing capabilities right on the device.

Picture of the Century Number 7 camera taken with a 2009 iPod.

06.

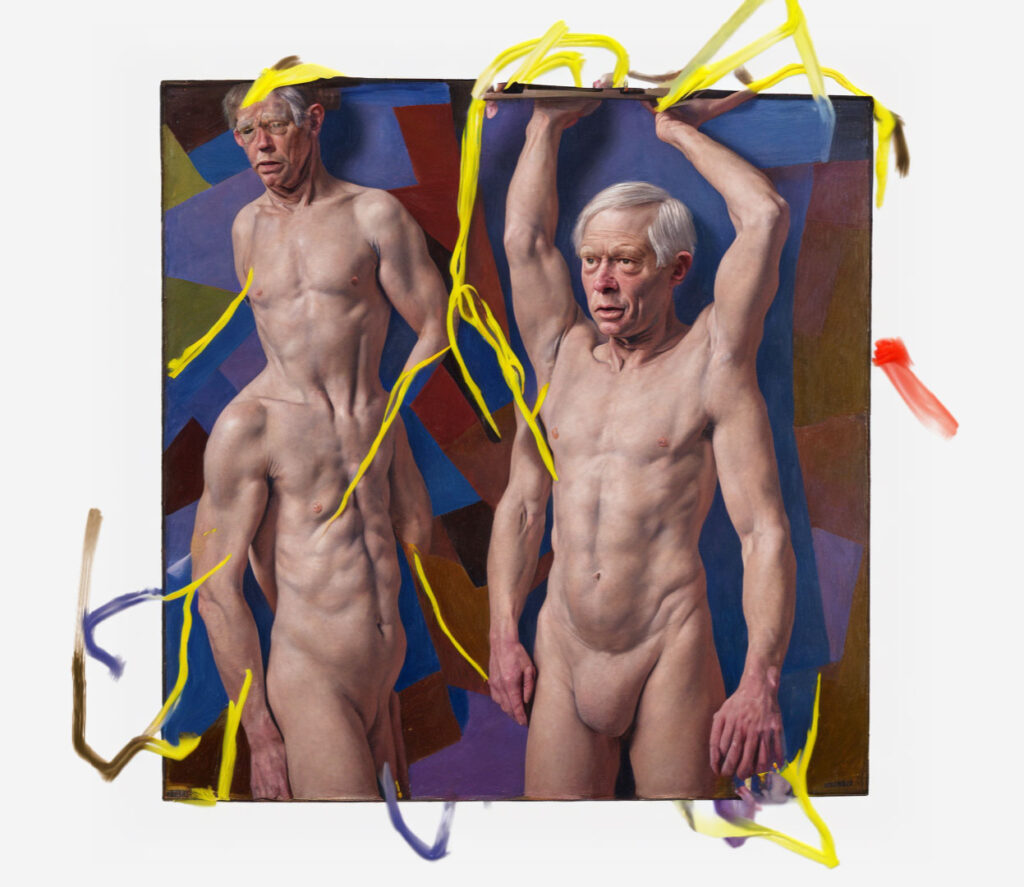

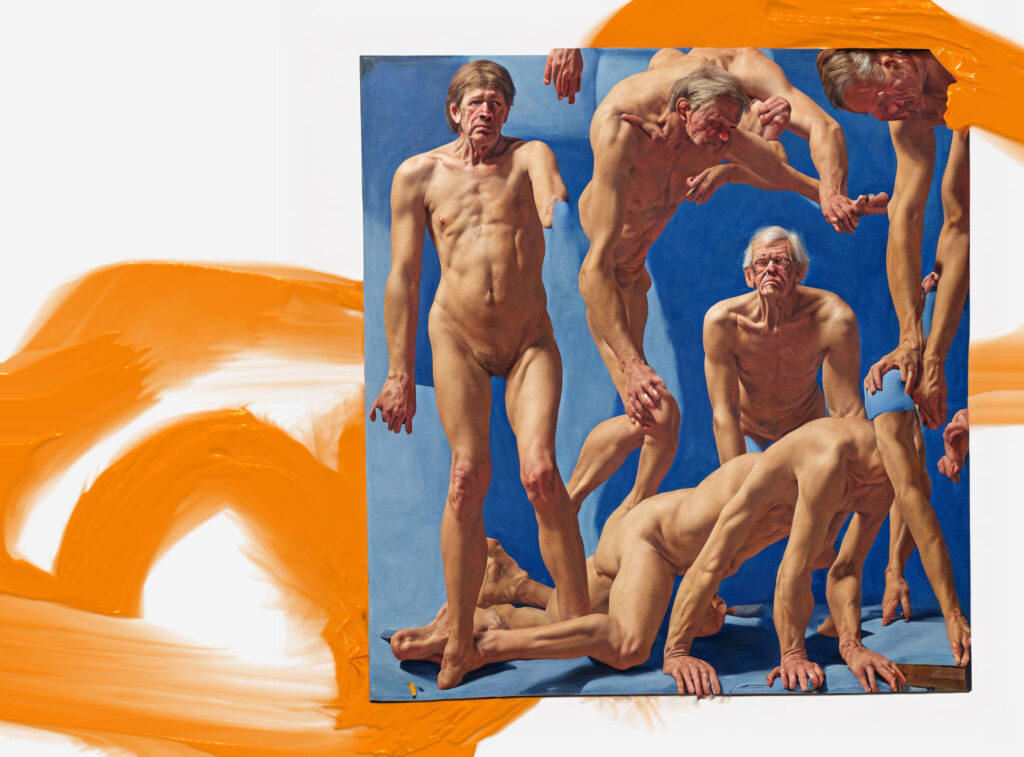

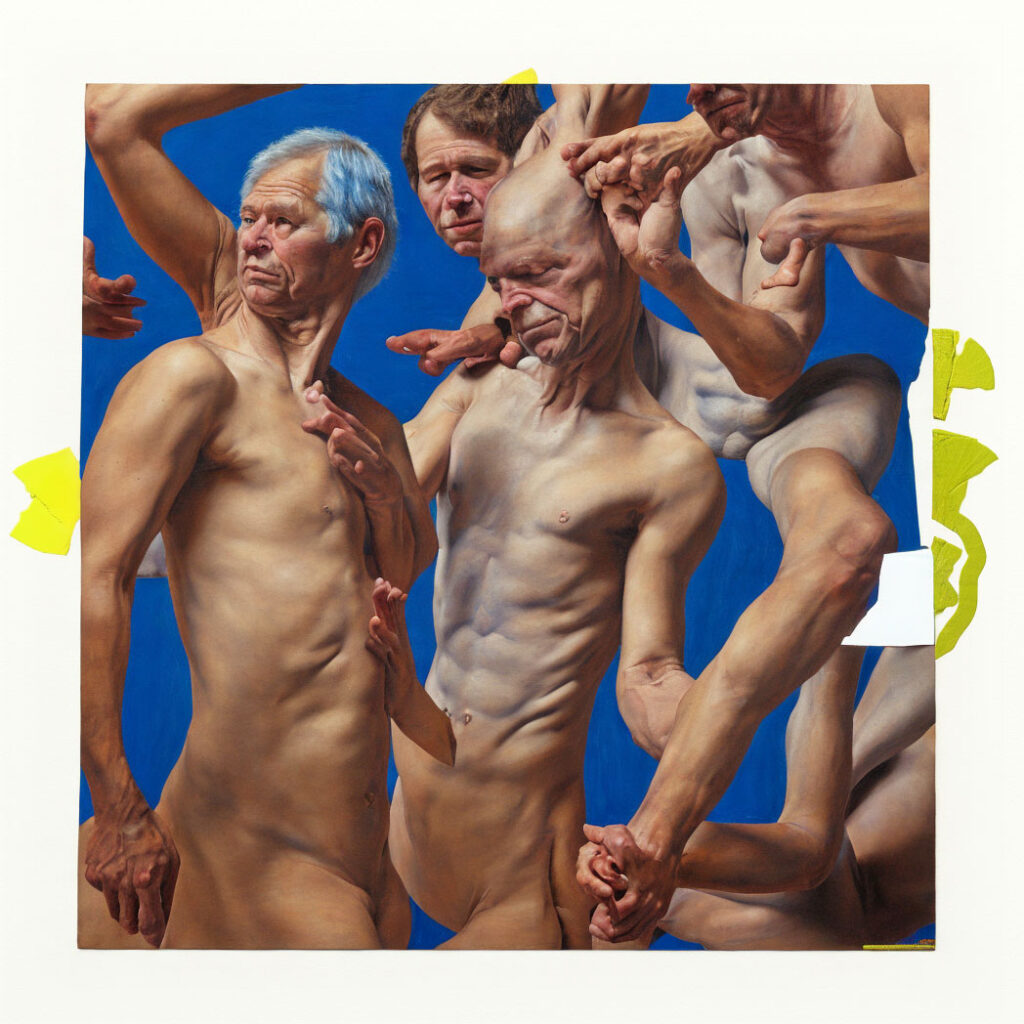

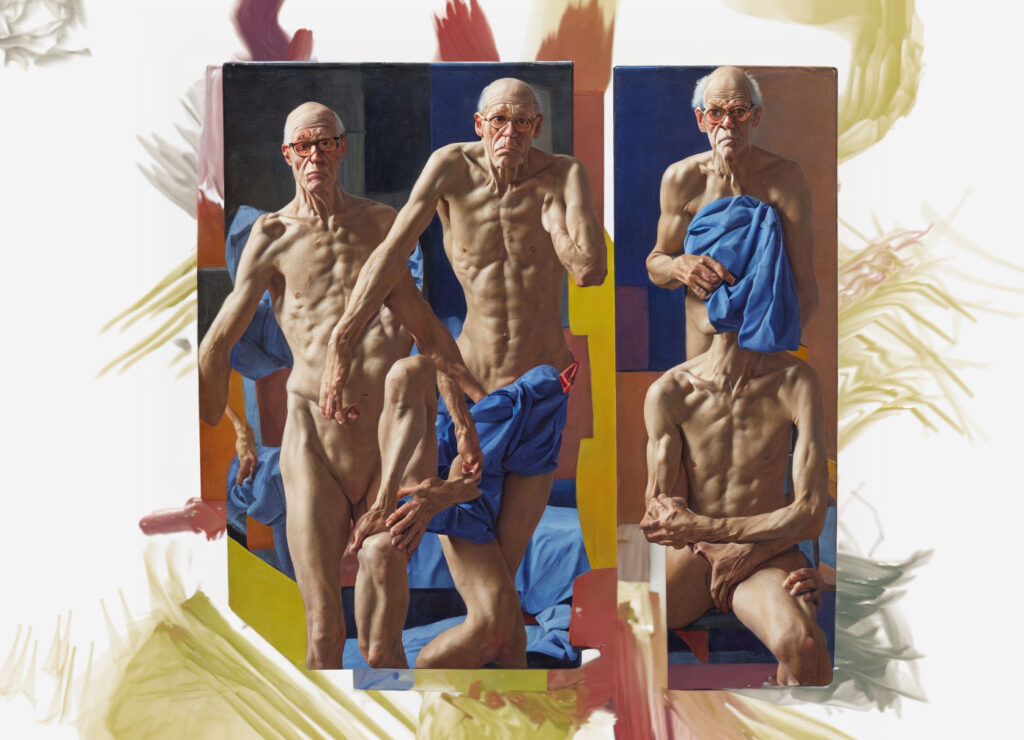

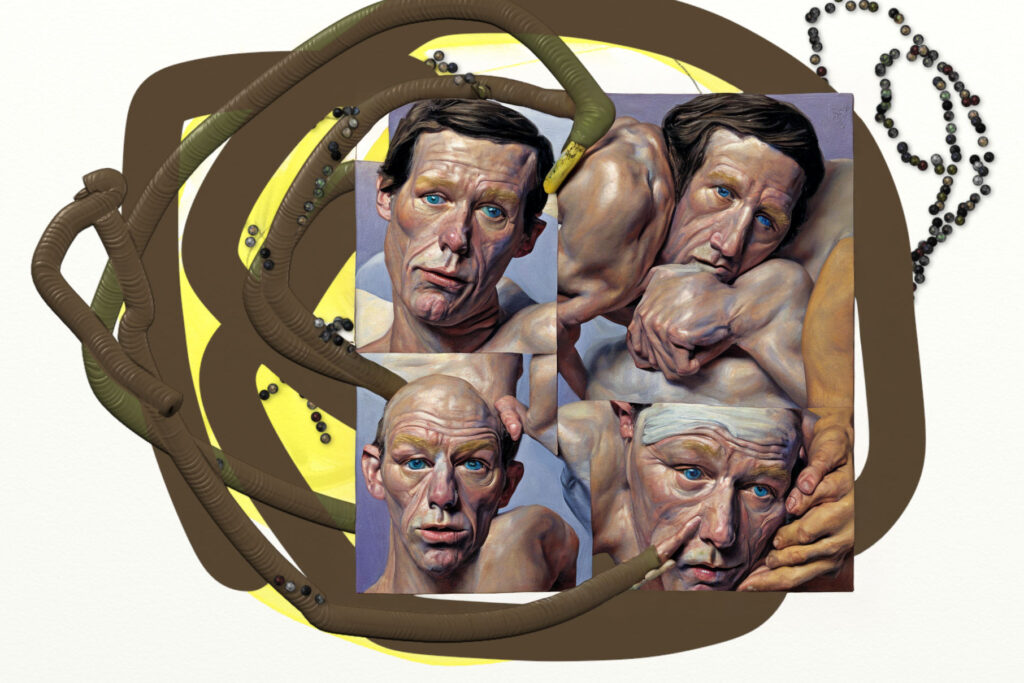

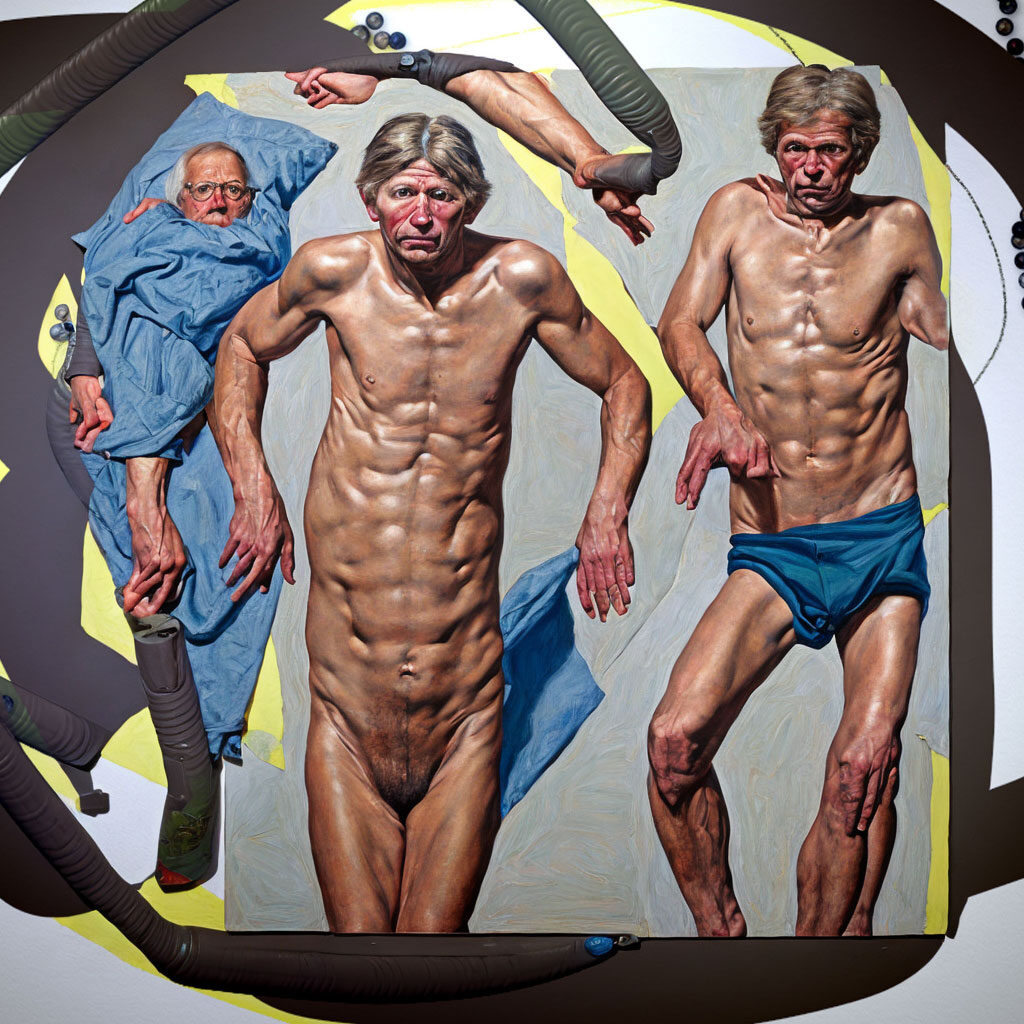

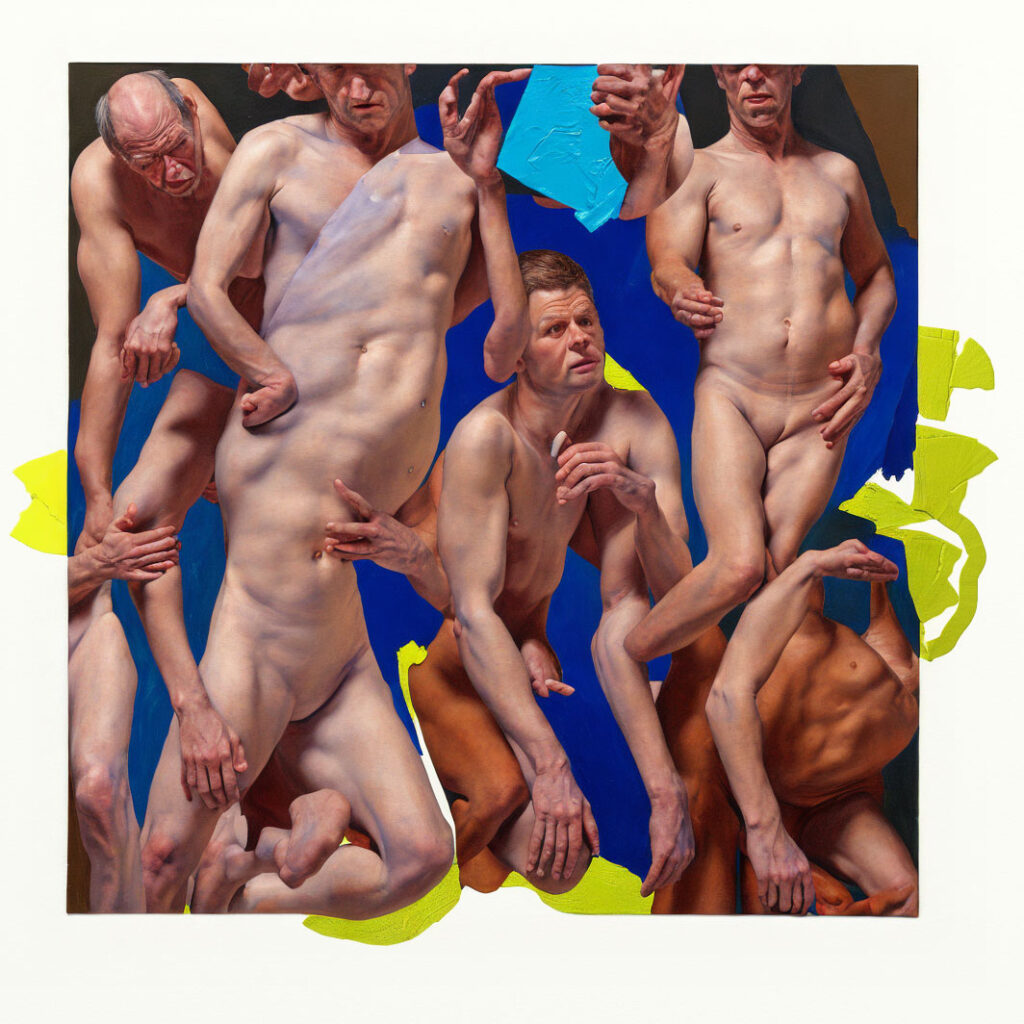

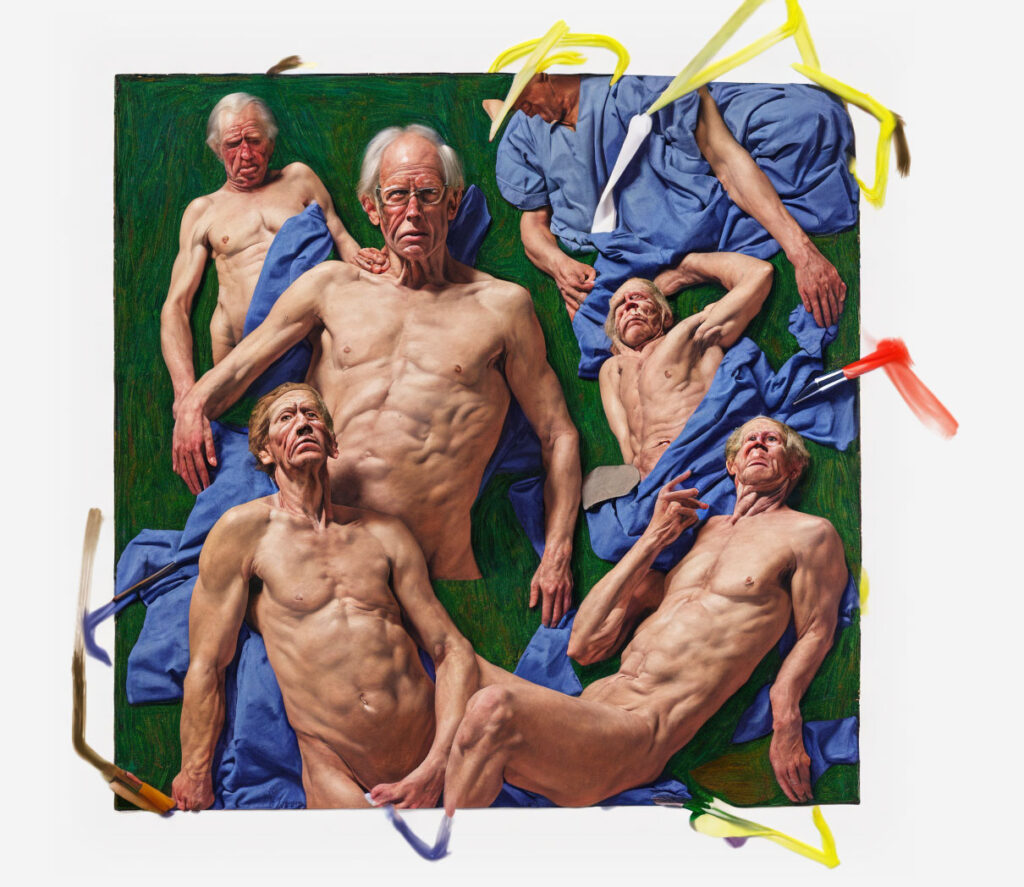

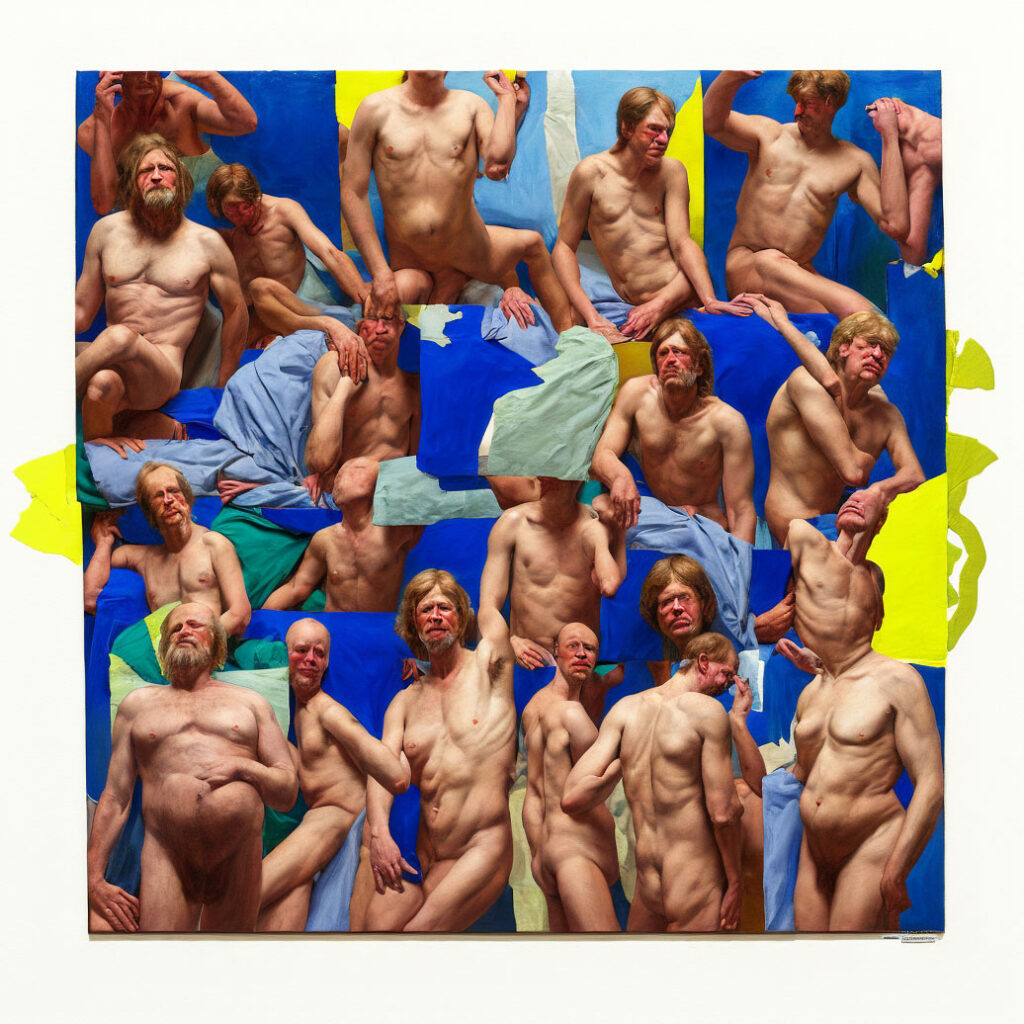

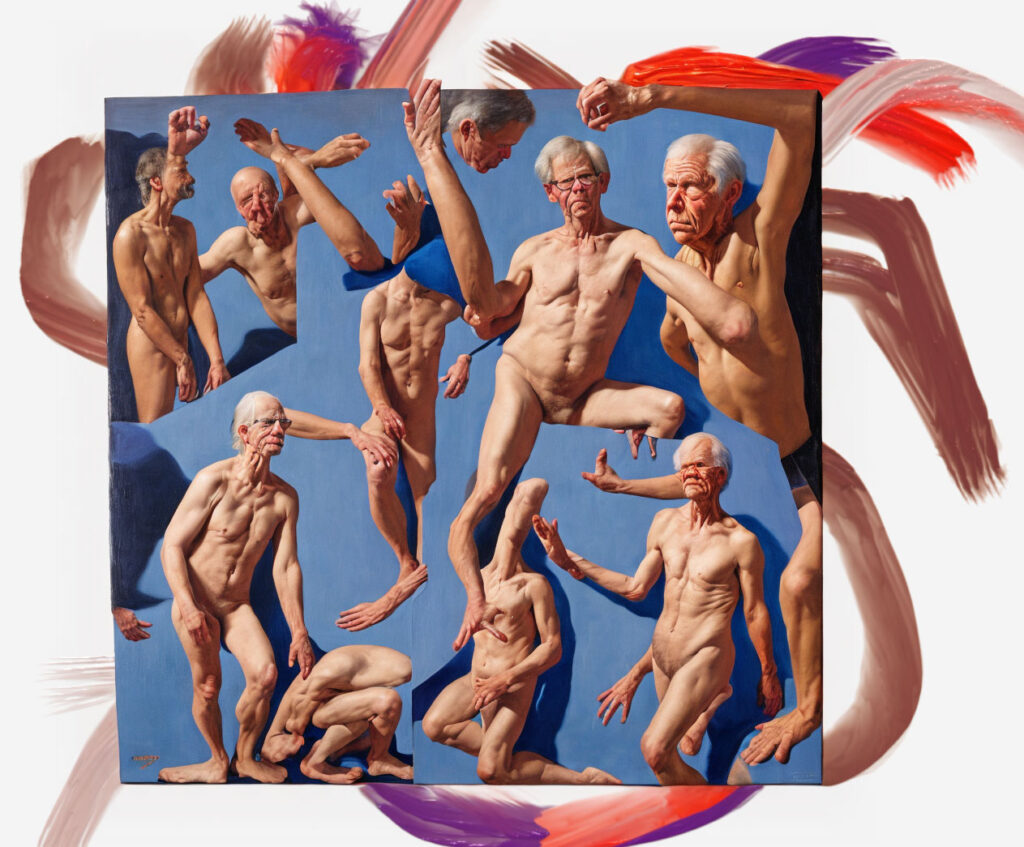

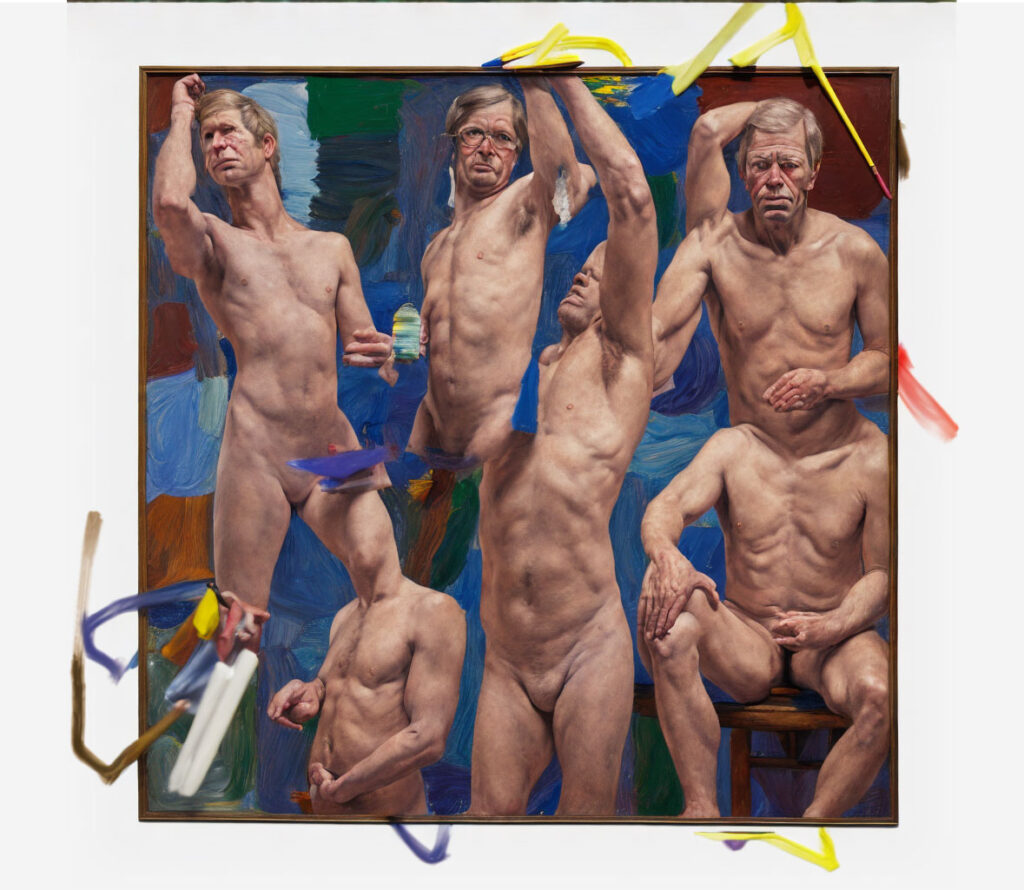

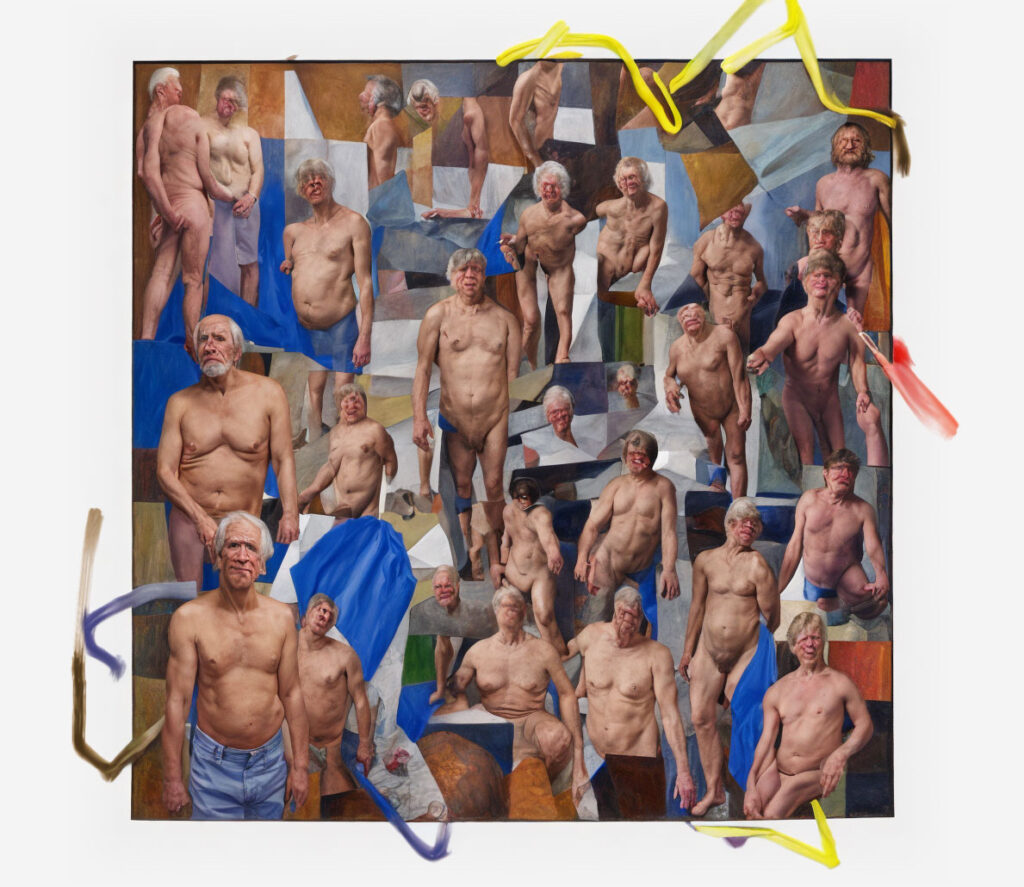

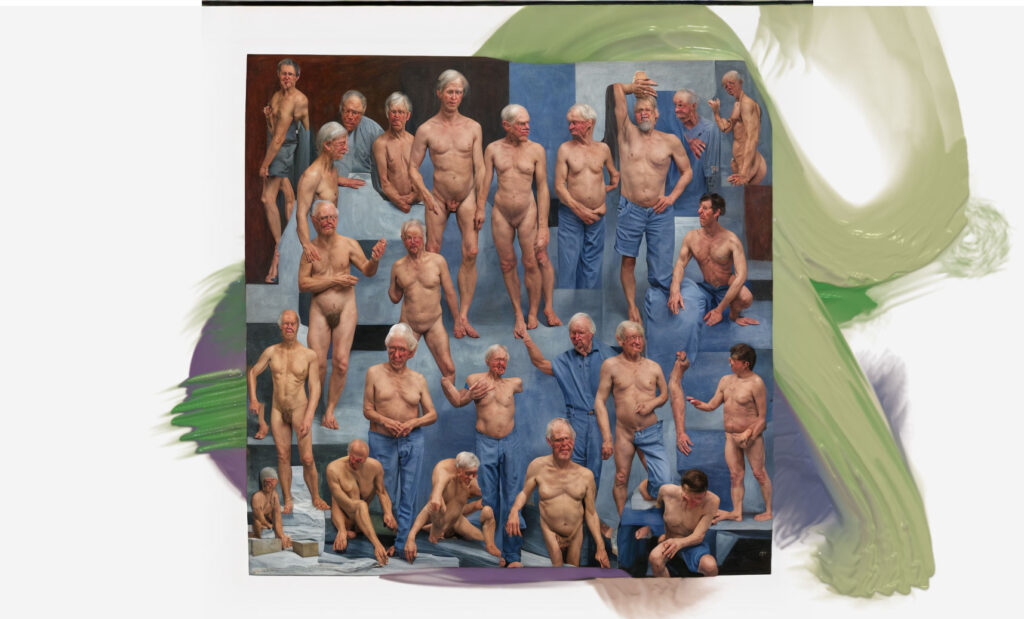

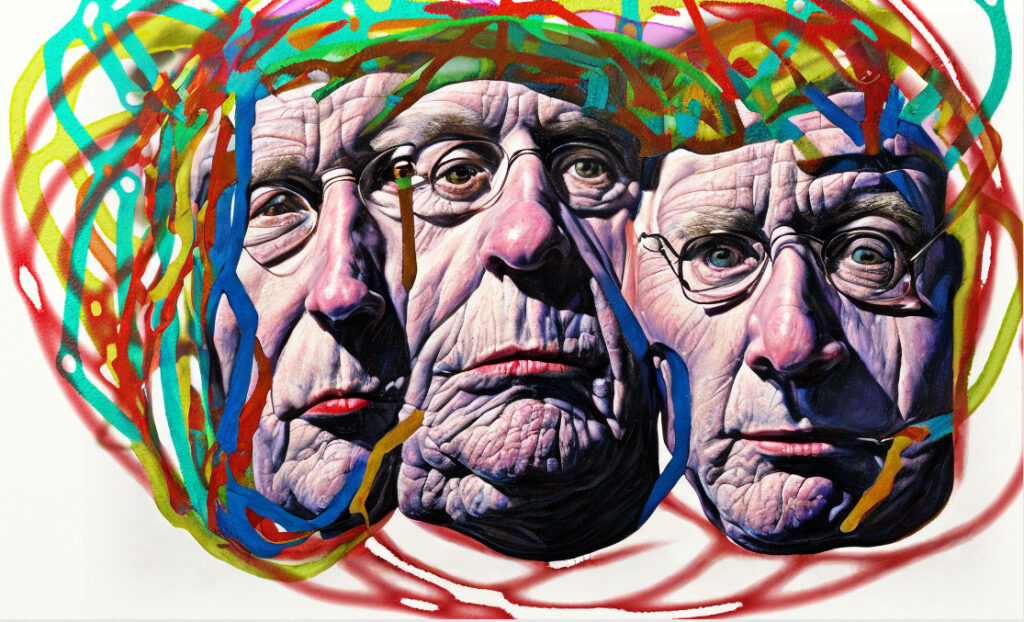

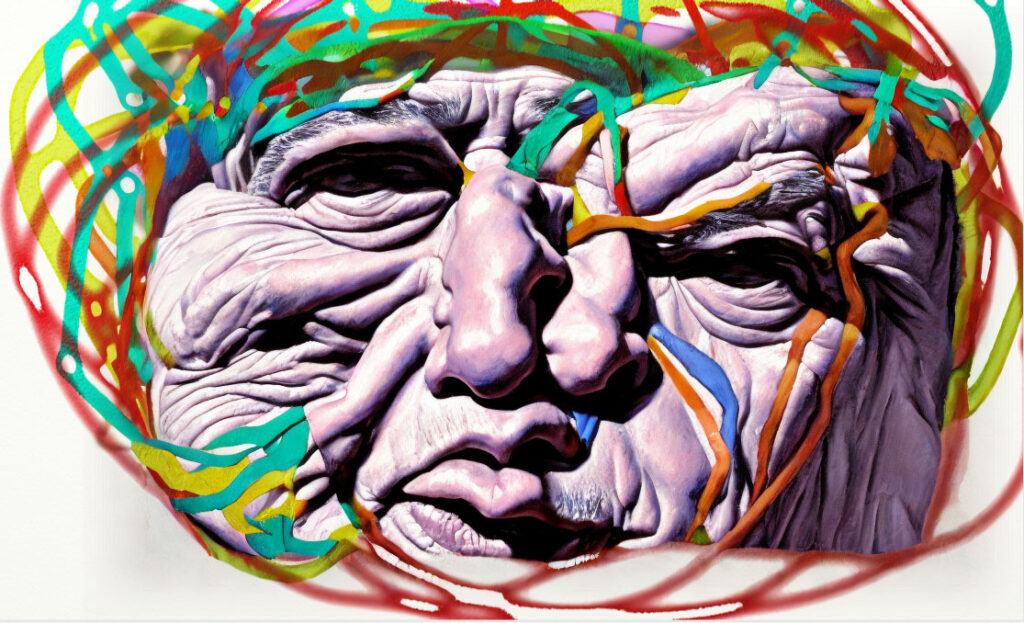

The resulting algorithm is then regenerated to produce a second variation of it.

The project is finished.

Algorithm 01 – AI

PHOTO 01 + PHOTO 02 + PHOTO 03 + PHOTO 04 + PHOTO 05

Algorithm 02 – AI

PHOTO 01 + PHOTO 02 + PHOTO 03 + PHOTO 04 + PHOTO 05

- Introduction:
  - Begin by outlining the objective of tracing the transition of a photographic apparatus from analog to AI, covering three distinct camera technologies and their evolution alongside a language model.

- Initial Setting:
  - Set the scene with the Century Number 7 camera capturing portraits in a town plaza in Argentina in 1972, highlighting its distinctive green flexible bellows.

- Camera Evolution:
  - Provide a historical overview of the Century Camera Co., established in 1900, and its subsequent acquisition by Eastman Kodak Co. in 1903, leading to its discontinuation in 1920.

- First Transition:
  - Transition to the iPod era, initiated by Apple Inc. in 2001, featuring portable digital audio players. Detail the iPod's key features, such as weight, storage capacity, screen size, battery life, and data transfer speed.

- Second Transition:
  - Progress to the iPhone 14, the latest smartphone model available at the time. Discuss its announcement, release date, screen size, camera enhancements, and connectivity capabilities.

- Personal Connection:
  - Introduce a personal connection to the narrative, mentioning the birth years of the protagonist's grandfather (1898) and the protagonist (1963). Emphasize the generational shift from older to newer technologies.

- Storage of Old Devices:
  - Describe the circumstances of the Century Number 7 camera being stored in a warehouse for 26 years before its rediscovery in 1998, highlighting the passage of time and the technological advances made during its hiatus.

- Capture with Obsolete iPod:
  - Explain how a photo of the Century Number 7 camera was taken using a 2009 iPod, symbolizing the convergence of traditional and modern technologies.

- Final Transition:
  - Conclude with the capture of the final photo of the Century Number 7 camera using an iPhone 14, symbolizing the culmination of technological evolution from analog to digital and from physical to AI.

- Personal Reflection:
  - Reflect on the fascination with both old and new technologies, and how the protagonist navigates between them using an iPhone 14 and ChatGPT, underscoring the interplay between past, present, and future in technological advancement.

This algorithm provides a structured approach to narrating the journey of technological evolution, weaving together historical context, personal connections, and the protagonist’s interaction with various devices.